One of the tasks of an architect who is implementing a data warehouse is to create the date dimensions. Because these dimensions have no source system, they are often created in something like Excel and then inserted directly into the dimension tables themselves. Searching the internet will probably reveal some code that will automatically create these dimensions. I've created one such PL/SQL block here that will create three date dimensions, a daily, monthly, and yearly grain. Because this will probably only be run once, there is no error handling nor is there a way to add to the date dimension (it will only create from scratch). Feel free and add those features if you so desire. Here is an explanation of the parameters:

1.) p_begin_year - This is the first year of the range of dates that you wish to create

2.) p_end_year - This is the last year of the range of dates that you wish to create

3.) p_create_tables - This is intended to have a value of YES or NO. Set it to YES if you want the tables to be created and populated. Set it to no if you only want the tables to be populated (e.g., they have already been created).

4.) p_include_fiscal_year - If you would like some data related to your fiscal year in the dim_day and dim_month tables, then set this value to YES. Otherwise, set it to NO.

5.) p_fiscal_year_begin_month_no - If the answer to #4 is YES, then set this to the calendar month number that is the first month in your fiscal year. In other words, if your fiscal year begins in July, set this to 7. If it begins in October, set it to 10, etc.

If nothing else, this will be a starting point for you with regards to creating a date dimension. Examine the code, tweak it, etc.

Note: This has been written in PL/SQL, meaning that it will only run in an Oracle database. If you would like to do this with a system from another database vendor, similar scripts can be written in that vendor's native language.

declare

p_begin_year int := 2001;

p_end_year int := 2014;

p_create_tables varchar2(3) := 'YES';

p_include_fiscal_year varchar2(3) := 'YES';

p_fiscal_year_begin_month_no int := 7;

d_first_day date;

d_last_day date;

d_loop_day date;

i_counter int;

begin

if upper(p_create_tables) = 'YES' then

EXECUTE IMMEDIATE 'CREATE TABLE DIM_DAY '

|| '( '

|| 'KEY_DAY INT, '

|| 'CALENDAR_DAY DATE, '

|| 'CALENDAR_MONTH_NUMBER INT, '

|| 'MONTH_NAME VARCHAR2(50), '

|| 'CALENDAR_QUARTER INT, '

|| 'CALENDAR_YEAR INT '

|| ')';

end if;

select to_date('01-JAN-' || p_begin_year, 'DD-MON-YYYY') into d_first_day from dual;

select to_date('31-DEC-' || p_end_year, 'DD-MON-YYYY') into d_last_day from dual;

d_loop_day := d_first_day;

i_counter := 1;

while d_loop_day <= d_last_day

loop

EXECUTE IMMEDIATE 'insert into dim_day (key_day, calendar_day, calendar_month_number, month_name, calendar_quarter, calendar_year) '

|| 'select ' || i_counter || ', '

|| '''' || to_char(d_loop_day, 'DD-MON-YYYY') || ''', '

|| 'extract(month from to_date(''' || d_loop_day || ''', ''DD-MON-YYYY'')),'

|| 'to_char(to_date(''' || d_loop_day || ''', ''DD-MON-YY''), ''MONTH''), '

|| 'case when extract(month from to_date(''' || d_loop_day || ''', ''DD-MON-YY'')) between 1 and 3 then 1 '

|| 'when extract(month from to_date(''' || d_loop_day || ''', ''DD-MON-YY'')) between 4 and 6 then 2 '

|| 'when extract(month from to_date(''' || d_loop_day || ''', ''DD-MON-YY'')) between 7 and 9 then 3 '

|| 'when extract(month from to_date(''' || d_loop_day || ''', ''DD-MON-YY'')) between 10 and 12 then 4 '

|| 'else -1 '

|| 'end, '

|| 'extract(year from to_date(''' || d_loop_day || ''', ''DD-MON-YY'')) '

|| 'from dual ';

i_counter := i_counter + 1;

d_loop_day := trunc(d_loop_day) + 1;

end loop;

commit;

if p_include_fiscal_year = 'YES' then

if p_create_tables = 'YES' then

EXECUTE IMMEDIATE 'alter table dim_day '

|| 'add '

|| '( '

|| 'FISCAL_MONTH_NUMBER INT, '

|| 'FISCAL_QUARTER INT, '

|| 'FISCAL_YEAR INT '

|| ') ';

end if;

EXECUTE IMMEDIATE 'update dim_day o '

|| 'set (o.fiscal_month_number, o.fiscal_year) = '

|| '( '

|| 'select case when i.calendar_month_number - ' || p_fiscal_year_begin_month_no || ' >= 0 then '

|| '(i.calendar_month_number - ' || p_fiscal_year_begin_month_no || ') + 1 '

|| 'else '

|| '12 + (i.calendar_month_number - ' || p_fiscal_year_begin_month_no || ') + 1 '

|| 'end, '

|| 'case when i.calendar_month_number - ' || p_fiscal_year_begin_month_no || ' >= 0 then '

|| 'i.calendar_year '

|| 'else '

|| 'i.calendar_year + 1 '

|| 'end '

|| 'from dim_day i '

|| 'where i.calendar_day = o.calendar_day '

|| ') ';

EXECUTE IMMEDIATE 'update dim_day o '

|| 'set o.fiscal_quarter = '

|| '( '

|| 'select case when i.fiscal_month_number in (1,2,3) then 1 '

|| 'when i.fiscal_month_number in (4,5,6) then 2 '

|| 'when i.fiscal_month_number in (7,8,9) then 3 '

|| 'when i.fiscal_month_number in (10,11,12) then 4 '

|| 'else -1 '

|| 'end '

|| 'from dim_day i '

|| 'where i.calendar_day = o.calendar_day '

|| ') ';

commit;

end if;

if upper(p_create_tables) = 'YES' then

if p_include_fiscal_year = 'YES' then

EXECUTE IMMEDIATE 'CREATE TABLE DIM_MONTH '

|| '( '

|| 'KEY_MONTH INT, '

|| 'CALENDAR_MONTH_NUMBER INT, '

|| 'CALENDAR_MONTH_NAME VARCHAR2(20), '

|| 'CALENDAR_QUARTER_NUMBER INT, '

|| 'CALENDAR_YEAR INT, '

|| 'FISCAL_MONTH_NUMBER INT, '

|| 'FISCAL_QUARTER_NUMBER INT, '

|| 'FISCAL_YEAR INT, '

|| 'FIRST_DAY DATE, '

|| 'LAST_DAY DATE '

|| ') ';

else

EXECUTE IMMEDIATE 'CREATE TABLE DIM_MONTH '

|| '( '

|| 'KEY_MONTH INT, '

|| 'CALENDAR_MONTH_NUMBER INT, '

|| 'CALENDAR_MONTH_NAME VARCHAR2(20), '

|| 'CALENDAR_QUARTER_NUMBER INT, '

|| 'CALENDAR_YEAR INT, '

|| 'FIRST_DAY DATE, '

|| 'LAST_DAY DATE '

|| ') ';

end if;

end if;

if p_include_fiscal_year = 'YES' then

EXECUTE IMMEDIATE 'insert into dim_month '

|| 'select rownum, a.* '

|| 'from '

|| '( '

|| 'select distinct calendar_month_number, '

|| 'month_name, '

|| 'calendar_quarter, '

|| 'calendar_year, '

|| 'fiscal_month_number, '

|| 'fiscal_quarter, '

|| 'fiscal_year, '

|| 'trunc(calendar_day,''month''), '

|| 'last_day(calendar_day) '

|| 'from dim_day '

|| 'order by calendar_year, '

|| 'calendar_month_number '

|| ') a ';

commit;

else

EXECUTE IMMEDIATE 'insert into dim_month '

|| 'select rownum, a.* '

|| 'from '

|| '( '

|| 'select distinct calendar_month_number, '

|| 'month_name, '

|| 'calendar_quarter, '

|| 'calendar_year, '

|| 'first_day(calendar_day), '

|| 'last_day(calendar_day) '

|| 'from dim_day '

|| ') '

|| 'order by a.calendar_year, '

|| 'a.calendar_month_number ';

commit;

end if;

if upper(p_create_tables) = 'YES' then

EXECUTE IMMEDIATE 'CREATE TABLE DIM_CALENDAR_YEAR '

|| '( '

|| 'KEY_CALENDAR_YEAR NUMBER, '

|| 'CALENDAR_YEAR NUMBER, '

|| 'FIRST_DAY DATE, '

|| 'LAST_DAY DATE '

|| ') ';

end if;

EXECUTE IMMEDIATE 'insert into dim_calendar_year '

|| 'select rownum, a.* '

|| 'from '

|| '( '

|| 'select distinct calendar_year, '

|| '''01-JAN-'' || calendar_year || '''', '

|| '''31-DEC-'' || calendar_year || '''' '

|| 'from dim_month '

|| 'order by calendar_year '

|| ') a ';

commit;

end;

Image courtesy of Anusorn P nachol / FreeDigitalPhotos.net

Showing posts with label Hands-on Practice. Show all posts

Showing posts with label Hands-on Practice. Show all posts

Friday, April 18, 2014

Friday, September 7, 2012

ETL - Practice Loading A Fact - Solution

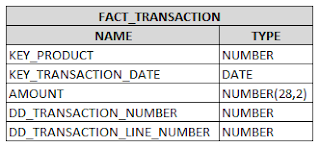

In a prior post we looked at the process by which a fact table is loaded. I need to apologize, since the ERD of the FACT_TRANSACTION table that I posted in that prior post was incorrect. It has since been updated. The four things that an ETL job needs to accomplish are...

1.) Select all necessary data from the source

a. Measures

b. Natural Keys of the dimension objects

c. Degenerate Dimension objects

2.) Transform the data as needed

a. Aggregate measures and group by the appropriate objects

b. Add foreign keys to dimension tables

3.) Delete any existing rows from the fact table that will be replaced by the new data

4.) Load the fact table

Consider this PL/SQL procedure as a procedure that will accomplish these things.

Extract

The STAGE_TRANSACTION_DATA table pulls all of the necessary elements from the source system into the staging area. I am treating one schema as both the staging area and the presentation area. This is probably unrealistic in a production environment but it keeps things simple for these purposes.

Transform

1.) The STAGE_TRANS_DATA_PROD_KEY table is used to grab the KEY_PRODUCT from the DIM_PRODUCT dimension. Notice that this is done by joining the product's natural key, which is the PRODUCT_NO (product number), to the DIM_PRODUCT table. An outerjoin is used so that if a record comes into the staging area for a product that does not exist in the DIM_PRODUCT table, that record will not be excluded from the fact table. It will exist with a value of -1 and will need to be addressed after the ETL has run.

2.) The STAGE_TRANS_DATA_DATE_KEY table is used to transform the TRANSACTION_DATE into a key that will join to a date dimension. I created the date dimension on my own, so I'll let you try and tackle that one. Those dimensions typically have no source and are created as needed by the ETL developer. Again, an outer join is used so that if a date does not exist in that dimension a value of -1 is placed into the fact table.

Load

1.) This fact table, because it is so small, is truncated and then repopulated each time that the ETL runs. Date logic can be written so that only a certain time period will be refreshed with each run, but for simplicity's sake I did it this way.

2.) Finally, the data is loaded into the FACT_TRANSACTION table.

Prior posts have talked about turning data into valuable data. This post shows you how to accomplish that in a hands-on way.

Remember to take a look at www.brianciampa.com (specifically, click on Career In Data Warehousing and then Grow) for larger datasets that can be used for this kind of practice.

Image: FreeDigitalPhotos.net

1.) Select all necessary data from the source

a. Measures

b. Natural Keys of the dimension objects

c. Degenerate Dimension objects

2.) Transform the data as needed

a. Aggregate measures and group by the appropriate objects

b. Add foreign keys to dimension tables

3.) Delete any existing rows from the fact table that will be replaced by the new data

4.) Load the fact table

Consider this PL/SQL procedure as a procedure that will accomplish these things.

Extract

The STAGE_TRANSACTION_DATA table pulls all of the necessary elements from the source system into the staging area. I am treating one schema as both the staging area and the presentation area. This is probably unrealistic in a production environment but it keeps things simple for these purposes.

Transform

1.) The STAGE_TRANS_DATA_PROD_KEY table is used to grab the KEY_PRODUCT from the DIM_PRODUCT dimension. Notice that this is done by joining the product's natural key, which is the PRODUCT_NO (product number), to the DIM_PRODUCT table. An outerjoin is used so that if a record comes into the staging area for a product that does not exist in the DIM_PRODUCT table, that record will not be excluded from the fact table. It will exist with a value of -1 and will need to be addressed after the ETL has run.

2.) The STAGE_TRANS_DATA_DATE_KEY table is used to transform the TRANSACTION_DATE into a key that will join to a date dimension. I created the date dimension on my own, so I'll let you try and tackle that one. Those dimensions typically have no source and are created as needed by the ETL developer. Again, an outer join is used so that if a date does not exist in that dimension a value of -1 is placed into the fact table.

Load

1.) This fact table, because it is so small, is truncated and then repopulated each time that the ETL runs. Date logic can be written so that only a certain time period will be refreshed with each run, but for simplicity's sake I did it this way.

2.) Finally, the data is loaded into the FACT_TRANSACTION table.

Prior posts have talked about turning data into valuable data. This post shows you how to accomplish that in a hands-on way.

Remember to take a look at www.brianciampa.com (specifically, click on Career In Data Warehousing and then Grow) for larger datasets that can be used for this kind of practice.

Image: FreeDigitalPhotos.net

Sunday, September 2, 2012

ETL - Practice Loading A Fact

We looked recently at the process used to load a dimension table. Consider this post as a way to practice loading a fact table. An ETL job that is designed to load a fact table will accomplish these things…

1.) Select all necessary data from the source

a. Measures

b. Natural Keys of the dimension objects

c. Degenerate Dimension objects

2.) Transform the data as needed

a. Aggregate measures and group by the appropriate objects

b. Add foreign keys to dimension tables

3.) Delete any existing rows from the fact table that will be replaced by the new data

4.) Load the fact table

As with the dimension example, no two developers will do this the same way, which is perfectly fine. Consider this example which is to be used in conjunction with the two tables used in the ETL – Practice Loading a Dimension post (click on the image to enlarge it)…

…and this fact table needs to be populated.

Run the following scripts in Oracle to create the source data

1.) Product data – This is the same script from the ETL – Practice Loading a Dimension post. If you have already run this one, you are fine.

2.) Transaction data

The TRANSACTION_DETAIL.PRODUCT_ID is a foreign key to the PRODUCT.ID field from the ETL – Practice Loading a Dimension post. If you wish, try to write an ETL job that will populate the FACT_TRANSACTION table in PL/SQL or another language of your choosing. We will examine a possible solution in a future post.

For more practice data and additional information on dimensional modeling visit www.brianciampa.com. Also, if you need a fresh approach to marketing your data warehousing skillset consider The Data Warehouse Portfolio.

For more practice data and additional information on dimensional modeling visit www.brianciampa.com. Also, if you need a fresh approach to marketing your data warehousing skillset consider The Data Warehouse Portfolio.

Friday, August 10, 2012

ETL - Practice Loading a Dimension - Solution

In the previous post we looked at the process used to write a basic ETL job to populate a dimension table. As discussed previously, while no two developers will write one exactly the same way, an ETL job that populates a dimension table will need to accomplish the following...

1.) Extract all of the dimension attributes from the source.

2.) Transform the data according to the requirements (i.e., concatenate first name and last name to create a full name column, etc.)

3.) Identify those records that are new to the table as opposed to those records that already exist in the table and may need to be updated due to updates in the source system

4.) Generate new surrogate key values and add them to the new records

5.) Load the records into the dimension table

Consider this example of an Oracle PL/SQL procedure that will populate the DIM_PRODUCT table. Before running the procedure keep these things in mind...

1.) I made an error in the SQL file from the previous post, so you may want to re-download that and run it again. The DIM_PRODUCT.PRODUCT_EFFECTIVE_FLAG should be named DIM_PRODUCT.PRODUCT_ACTIVE_FLAG.

2.) This job depends on an Oracle sequence to create the surrogate keys. Before running the job, run this statement in your Oracle environment...

CREATE SEQUENCE

SEQ_DIM_PRODUCT

MINVALUE 0

INCREMENT BY 1

START WITH 1;

3.) I'll encourage you not to get too lost in the Oracle syntax. The point is to examine the logical flow of an ETL job. If you have a better way of structuring the Oracle code (or want to use something other than Oracle), then by all means do that.

Run the procedure (after you have created the sample data provided in the previous post, of course) to populate the DIM_PRODUCT dimension. Notice what is happening...

1.) Extract all of the dimension attributes from the source - Everything is pulled from the source system and placed into the STAGE_PRODUCT_EXTRACT table.

2.) Transform the data according to the requirements (i.e., concatenate first name and last name to create a full name column, etc.) - The PRODUCT_ACTIVE_FLAG is derived and that data is placed into the STAGE_PRODUCT_TRANSFORM table.

3.) Identify those records that are new to the table as opposed to those records that already exist in the table and may need to be updated due to updates in the source system - New records are identified (via an outer join) and placed into the STAGE_PRODUCT_LOAD table.

4.) Generate new surrogate key values and add them to the new records - Surrogate keys are created in the STAGE_PRODUCT_LOAD table using the Oracle sequence mentioned earlier.

5.) Load the records into the dimension table - Existing records are updated in the DIM_PRODUCT table and the new records from the STAGE_PRODUCT_LOAD table are loaded.

This job is designed to be run as many times as necessary. Running one time or multiple times should still result in 25 records being placed into the DIM_PRODUCT table. This is a simple example for a few reasons, one of which is that we are working with a very small amount of data. A more complex ETL job may examine the source system's data and somehow determine which records are new and/or have been updated before pulling them into the staging area.

Also, more complex ETL jobs may not have five simple steps, as this one does, to accomplish the five things listed above. It may take several steps to accomplish those five things due to the complexity of the data.

If you wish, change some of the source data to see the updates occur or tweak the ETL job to your liking. By running this ETL job, you have just turned ordinary data into something that is truly valuable.

Image: FreeDigitalPhotos.net

1.) Extract all of the dimension attributes from the source.

2.) Transform the data according to the requirements (i.e., concatenate first name and last name to create a full name column, etc.)

3.) Identify those records that are new to the table as opposed to those records that already exist in the table and may need to be updated due to updates in the source system

4.) Generate new surrogate key values and add them to the new records

5.) Load the records into the dimension table

Consider this example of an Oracle PL/SQL procedure that will populate the DIM_PRODUCT table. Before running the procedure keep these things in mind...

1.) I made an error in the SQL file from the previous post, so you may want to re-download that and run it again. The DIM_PRODUCT.PRODUCT_EFFECTIVE_FLAG should be named DIM_PRODUCT.PRODUCT_ACTIVE_FLAG.

2.) This job depends on an Oracle sequence to create the surrogate keys. Before running the job, run this statement in your Oracle environment...

CREATE SEQUENCE

SEQ_DIM_PRODUCT

MINVALUE 0

INCREMENT BY 1

START WITH 1;

3.) I'll encourage you not to get too lost in the Oracle syntax. The point is to examine the logical flow of an ETL job. If you have a better way of structuring the Oracle code (or want to use something other than Oracle), then by all means do that.

Run the procedure (after you have created the sample data provided in the previous post, of course) to populate the DIM_PRODUCT dimension. Notice what is happening...

1.) Extract all of the dimension attributes from the source - Everything is pulled from the source system and placed into the STAGE_PRODUCT_EXTRACT table.

2.) Transform the data according to the requirements (i.e., concatenate first name and last name to create a full name column, etc.) - The PRODUCT_ACTIVE_FLAG is derived and that data is placed into the STAGE_PRODUCT_TRANSFORM table.

3.) Identify those records that are new to the table as opposed to those records that already exist in the table and may need to be updated due to updates in the source system - New records are identified (via an outer join) and placed into the STAGE_PRODUCT_LOAD table.

4.) Generate new surrogate key values and add them to the new records - Surrogate keys are created in the STAGE_PRODUCT_LOAD table using the Oracle sequence mentioned earlier.

5.) Load the records into the dimension table - Existing records are updated in the DIM_PRODUCT table and the new records from the STAGE_PRODUCT_LOAD table are loaded.

This job is designed to be run as many times as necessary. Running one time or multiple times should still result in 25 records being placed into the DIM_PRODUCT table. This is a simple example for a few reasons, one of which is that we are working with a very small amount of data. A more complex ETL job may examine the source system's data and somehow determine which records are new and/or have been updated before pulling them into the staging area.

Also, more complex ETL jobs may not have five simple steps, as this one does, to accomplish the five things listed above. It may take several steps to accomplish those five things due to the complexity of the data.

If you wish, change some of the source data to see the updates occur or tweak the ETL job to your liking. By running this ETL job, you have just turned ordinary data into something that is truly valuable.

Image: FreeDigitalPhotos.net

Friday, August 3, 2012

ETL - Practice Loading a Dimension

We've been looking recently at the concept of extract, transform, and load (ETL) jobs. This post will begin to examine some of the mechanics. When loading a dimension table the ETL job should accomplish the following things...

1.) Extract all of the dimension attributes from the source.

2.) Transform the data according to the requirements (i.e., concatenate first name and last name to create a full name column, etc.)

3.) Identify those records that are new to the table as opposed to those records that already exist in the table and may need to be updated due to updates in the source system

4.) Generate new surrogate key values and add them to the new records

5.) Load the records into the dimension table

Each ETL job that populates a dimension table will need to accomplish these five things, although no two developers will develop a job exactly the same way. To get a feel for this type of job, consider this very simple example. A source system contains these two tables among others (click the picture to enlarge)...

...and this dimension needs to be populated...

If you have an Oracle environment available to you, run this sql file to create the two source system tables, populate them with data, and create the DIM_PRODUCT table. Each product, as you can see, is grouped under a single product group. The PRODUCT_EFFECTIVE_FLAG indicates whether or not a product is currently effective (current at the time of the ETL job). If you wish, try to write an ETL job that will populate the DIM_PRODUCT table in PL/SQL or another language of your choosing. We will examine a possible solution in a future post.

1.) Extract all of the dimension attributes from the source.

2.) Transform the data according to the requirements (i.e., concatenate first name and last name to create a full name column, etc.)

3.) Identify those records that are new to the table as opposed to those records that already exist in the table and may need to be updated due to updates in the source system

4.) Generate new surrogate key values and add them to the new records

5.) Load the records into the dimension table

Each ETL job that populates a dimension table will need to accomplish these five things, although no two developers will develop a job exactly the same way. To get a feel for this type of job, consider this very simple example. A source system contains these two tables among others (click the picture to enlarge)...

...and this dimension needs to be populated...

If you have an Oracle environment available to you, run this sql file to create the two source system tables, populate them with data, and create the DIM_PRODUCT table. Each product, as you can see, is grouped under a single product group. The PRODUCT_EFFECTIVE_FLAG indicates whether or not a product is currently effective (current at the time of the ETL job). If you wish, try to write an ETL job that will populate the DIM_PRODUCT table in PL/SQL or another language of your choosing. We will examine a possible solution in a future post.

Saturday, April 14, 2012

Practice Using Slowly Changing Dimensions

A few weeks ago we looked at slowly changing dimensions. I've provided a script that will create a table called dim_employee_scd and fact_salary_scd. These are two slightly altered versions of the same tables in the star that we have been using (if you are just joining us, don't worry, they can stand alone too).

In this case, the dim_employee_scd acts as a slowly changing dimension. As always, don't read too much into the dates and/or salary amounts. This data is entirely fictitious. Run the script (here are instructions if needed), play with the data and see if you can answer these questions...

1.) How much did John Farmer receive in salary from Information Systems as opposed to Human Resources?

2.) As a percentage, how many more (or fewer) dollars did James Couch receive in salary when he moved from the first to the second and then from the second to the third position? Remember, even if the numbers decrease although it appears that he was promoted, that's ok. This is fictitious data and the point is to see there there was a change of some sort.

3.) Try to display salary dollars for active only positions, which may require that you make an assumption about the business rule.

We'll look at some solutions in the future.

In this case, the dim_employee_scd acts as a slowly changing dimension. As always, don't read too much into the dates and/or salary amounts. This data is entirely fictitious. Run the script (here are instructions if needed), play with the data and see if you can answer these questions...

1.) How much did John Farmer receive in salary from Information Systems as opposed to Human Resources?

2.) As a percentage, how many more (or fewer) dollars did James Couch receive in salary when he moved from the first to the second and then from the second to the third position? Remember, even if the numbers decrease although it appears that he was promoted, that's ok. This is fictitious data and the point is to see there there was a change of some sort.

3.) Try to display salary dollars for active only positions, which may require that you make an assumption about the business rule.

We'll look at some solutions in the future.

Saturday, March 31, 2012

Practice Using Conformed Dimensions

In the last post we looked at the benefits of having conformed dimensions. If you wish, feel free to play with this yourself. I have two scripts available that will essentially add a fact_salary table to the star that we used for practice a few weeks ago.

- If you created that star in your Oracle instance already and want to simply add the fact_salary table, use this script.

- If you want to create the entire star in your Oracle instance, use this script.

The updated ERD is below (if the image is too small to read, click on it to have it enlarged).

See if you can write some SQL that will allow you to compare the measures between the two fact tables. If you are really into this, try adding a third or fourth fact table of your own (and more dimensions if needed) and doing some additional comparisons. A powerful use of a data warehouse involves comparing measures between multiple business processes to answer questions like…

- How does his productivity growth compare to his salary growth?

- How does this year’s increase in revenue (either in dollars or as a percentage) compare to last year’s increase in dollars allocated to new marketing initiatives?

- How are the number of new work from home opportunities affecting employee turnover?

Using conformed dimensions can help to easily answer questions like these. What was once just a pile of data is now providing valuable information to decision makers.

Saturday, March 10, 2012

Gaining Business Intelligence

In the last post we looked at a hands-on example of analyzing data using a star schema. The questions were…

1.) Who was the highest performer in terms of dollars in the first quarter?

2.) Who was the highest performer in terms of volume in the first quarter?

3.) The business experts speculate that a product may have been applied to a line item with the incorrect dollar amount. Using this star, see if you can identify where that may have happened.

Keeping in mind that there are several ways to analyze the data so that these questions can be answered, here are a few ways that will work.

1.) The highest performer in terms of dollars was James Couch with $3,529.98. This can be answered using this SQL statement…

select c.first_name, c.last_name, sum(a.amount), count(a.transaction_line)

from fact_transaction a,

dim_day b,

dim_employee c,

dim_product d

where a.key_day = b.key_day

and a.key_sales_person = c.key_employee

and a.key_product = d.key_product

and b.calendar_quarter = 1

group by c.first_name, c.last_name

order by 3 desc

2.) The highest performer in terms of volume turned out to be several people. Gabriel Palmer, Norma Manlow, and Arnold Tab all fit that bill with 7. This can also be answered using the SQL statement above by changing the last line to “order by 4 desc”.

3.) This request from the business offered an interesting challenge. It would be possible to export all of the transaction data to a spreadsheet and then ask an analyst to examine it line-by-line to find the item that was misapplied. That option would be very tedious and time consuming. Consider this SQL statement…

select d.product_name, b.calendar_quarter, sum(a.amount)/count(a.transaction_line)

from fact_transaction a,

dim_day b,

dim_employee c,

dim_product d

where a.key_day = b.key_day

and a.key_sales_person = c.key_employee

and a.key_product = d.key_product

group by d.product_name, b.calendar_quarter

order by 1,2

This is examining the average price per product per quarter. Scrolling down the list will show an anomaly with the Men’s Athletic Shoes product in calendar quarter 2. Writing the select statement so that it shows the distinct quarter, product, and amount will essentially lead you to the same conclusion. As I mentioned above, there may be multiple ways to answer each question. Anyway, at this point, independent of which method you choose, more detail can be added to the query so that more information can be gathered. Now that we have identified the portion of the “forest” that appears to be problematic (Men’s Athletic Shoes in quarter 2), we can limit our analysis to the “trees” that make up that portion (the individual transactions). Consider the following SQL statement…

select d.product_name, a.transaction_number, a.transaction_line, sum(a.amount)

from fact_transaction a,

dim_day b,

dim_employee c,

dim_product d

where a.key_day = b.key_day

and a.key_sales_person = c.key_employee

and a.key_product = d.key_product

and b.calendar_quarter = 2

and d.product_name = 'Men''s Athletic Shoes'

group by d.product_name, a.transaction_number, a.transaction_line

Scrolling down shows us that line one of transaction 1345641 has an odd amount associated with it. The source system owners can be alerted that this transaction may need to be investigated (assuming that there is nothing wrong with the ETL job).

While this is a simplistic example (the highest performer only sold 7 items) I hope the point is obvious. Warehousing data allows users to easily find answers to business questions like these. If this is the first time that you have done something like this, welcome to Business Intelligence. If you enjoy this kind of thing, continue to play with the data to see what other conclusions you can draw.

Sunday, March 4, 2012

Seeking Business Intelligence

In a prior post, we looked at a hands-on example of examining data in a star schema. Suppose an analyst on the business side does some manual analysis each quarter to determine who deserves the top performer award. You are interested in showing her how the data warehouse can be used to easily answer this question without the need for a lot of manual analysis. Using this star, see if you can answer these questions…

1.) Who was the highest performer in terms of dollars in the first quarter?

2.) Who was the highest performer in terms of volume in the first quarter?

The value of the data warehouse is rising in the eyes of the business community to the point that it is being used more and more for information. The business experts, after looking at some high-level data, speculate that a product may have been applied to a line item with the incorrect dollar amount. Using this star, see if you can identify where that may have happened. By asking these questions (or others), you are using the data warehouse to seek intelligence about your business or organization.

Spend some time answering these questions and in a future post we will examine the solutions. If you find yourself enjoying this exercise that’s probably a tell-tale sign that you enjoy business intelligence.

Image: digitalart / FreeDigitalPhotos.net

Saturday, February 25, 2012

Practice Makes Perfect

As with any skill, only so much can be learned from a book (or a blog post). In this post, I’m hoping to offer some hands-on training that will allow you to play with some data a bit. If you have an Oracle environment available to you (check the previous post if you do not), you can run the script found here. If you are unfamiliar with running a script like this, some suggestions are in the previous post as well. The following tables with data will be created…

This is a very basic star that holds data related to sales transactions. Here is a quick breakdown of the tables…

Dim_Product (22 rows): This is a dimension table that contains a product’s overall group (larger grouping) and then the individual product numbers and names within that group (smaller grouping).

Dim_Employee (19 rows): This is a dimension table that contains information regarding the employees that sold the products.

Dim_Day (365 rows): This is a date dimension table that contains one row for each day as well as higher-level date groupings. In this example, this table only holds data in calendar year 2011 (1/1/2011 – 12/31/2011).

Fact_Transaction (300 rows): This is a fact table that contains one row for each transaction line item. So, if an individual purchased three items on the same transaction (i.e., brought three items to the cash register) that transaction will produce three rows in this fact table.

Data Warehousing is ideal for top-down analysis. Once the script has been run in Oracle, and the tables have been created, consider the following example…

select b.calendar_year, sum(a.amount)

from fact_transaction a,

dim_day b,

dim_employee c,

dim_product d

where a.key_day = b.key_day

and a.key_sales_person = c.key_employee

and a.key_product = d.key_product

group by b.calendar_year

This select statement shows the dollars sold per fiscal year, although there is only one year in this case. If you want to throw in the product_group to see the dollars sold per year, per product_group, that will give you an additional level of detail…

select b.calendar_year, d.product_group, sum(a.amount)

from fact_transaction a,

dim_day b,

dim_employee c,

dim_product d

where a.key_day = b.key_day

and a.key_sales_person = c.key_employee

and a.key_product = d.key_product

group by b.calendar_year, d.product_group

Do you see what is happening? You can continue drilling down until you get to a specific transaction_line if you wish.

An analyst may examine the data at a high-level and notice that one quarter had an enormous number of sales dollars. She can keep adding descriptors to the select clause (and group by them) to eventually find where those dollars lie. Did one sales rep have a stellar quarter? Did one product group do exceptionally well? Did sales go through the roof on one particular day out of the quarter? Did one product alone do exceptionally well? Once you have the information you can turn that into action. Does somebody deserve a raise for their extraordinary work? Did one marketing strategy work remarkably well, meaning that we need to consider implementing it across the entire organization?

This type of structure can turn data into valuable data. Valuable data can enable great decisions. Have fun playing with the data. See how many ways you can slice and dice it. We will do some more with this star in future posts.

Sunday, February 19, 2012

Hands-On Training

As with anything else, the way to become familiar with data management is to constantly work with data. I would like to offer some of that through this blog, in addition to continuing to examine some of the concepts of data warehousing. I plan to provide scripts that will create data in your own Oracle instance so that you can practice working with it. If you do not have access to (and permission to use) an Oracle instance through your employer, your school, or some other entity, one option is to use a couple of free products explained below.

Oracle offers a free product called Oracle Database Express Edition that, among other things, allows users to become familiar with data management. This is a smaller version of the Oracle database that can be loaded onto a local machine running Windows or Linux. Oracle Database Express Edition can be downloaded here.

Oracle also offers a free integrated development environment (IDE) called Oracle SQL Developer that allows you to easily query data and build database objects. Oracle SQL Developer can be downloaded here.

Oracle also offers a free integrated development environment (IDE) called Oracle SQL Developer that allows you to easily query data and build database objects. Oracle SQL Developer can be downloaded here.

As a test, download the very simple sql script here. This will create one basic table and insert three records. Here are two ways of running it…

From an IDE - Open the file from an IDE (such as Oracle SQL Developer although others may be used as well) and run the script. In Oracle SQL Developer, this involves clicking the Run Script button on the tool bar or pressing F5.

Using SQLPlus (in Windows)

1.) On the machine on which Oracle is installed go to the windows command line (from the Run box type CMD).

2.) Navigate to the folder that contains the script using the cd syntax.

3.) Type the following: sqlplus {schema name}/{password} @{filename} So, if you are loading this into a schema called “myschema” with a password being “mypassword”, the command would look like this: sqlplus myschema/mypassword @test_tbl Including the “.sql” extension with the filename is not necessary.

4.) Press Enter.

You should see that one table was created, three rows were created, and the transactions were committed. You can simply drop the test_tbl table at this point. We will still be looking at some of the theory behind data warehousing, but along with that I’ll provide some paths for hands-on training. I don’t want to get lost in the mechanics of getting the data into the database, so if you have a better way of loading a script like this, by all means use it (and share with us if you would like).

Find an Oracle environment that you works well for you, or create one using the products above (or something else if you like). In the next post or two, I’ll provide a star schema that we can look at.

As an aside, I’m not trying to endorse Oracle as being a better product for building a data warehouse than something else. My experience is with this product so it is the easiest and quickest way for me to share data in this way. If you would like to take my scripts and update them so that they are appropriate for another product, feel absolutely free to do that.

Image: Ambro / FreeDigitalPhotos.net

Image: Ambro / FreeDigitalPhotos.net

Subscribe to:

Posts (Atom)