One of the challenges of writing ETL involves deciding which records to pull from the source system. If a salary star schema is built to contain payroll data, then the ETL job will refresh on a recurring basis to add new data to that star. If some data related to salary dollars already exists in the star and some new data makes it into the source system, how can the ETL job know to load the new data into the star and avoid reloading the records that were previously loaded (since they already exist in the data warehouse)? There is no one way of doing this, but consider these options...

1.) Use the source system's datetime stamps - An ETL developer should consider himself very blessed if his source system contains an insert and update datetime stamp for each record in the system. If this is the case, then he can simply store the date and time of the most recent successful extract and then pull everything that has been inserted or updated since then. It will be important for the ETL developer to understand how those audit columns are populated in each table to make sure that necessary data will not be left out and unnecessary data will not be included due to odd occurances (i.e., a source system table is reloaded for some reason and records that pertain to data that was initially added three years ago have yesterday's timestamp, etc.).

2.) Compare to the prior extract - In his book The Data Warehouse ETL Toolkit : Practical Techniques for Extracting, Cleaning, Conforming, and Delivering Data, Ralph Kimball suggests always saving the prior extract in the staging area. A new extract can be compared to this prior extract and the differences will be uploaded. Finally the new extract will overwrite the prior extract and be used as the baseline for the next update.

3.) Always pull as of a certain date - In this approach, the salary dollars may always be extracted for the current calendar year (for example). So, as the year goes on each extraction will be slightly larger than the last. In April, all salary dollars that date back to January will be extracted from the source. In May, all salary dollars that date back to January will be extracted from the source as well. When using this option in conjunction with loading a fact table, the ETL developer will need to delete all of the applicable year's records from the fact table and then load the extract. Although several records will be deleted and simply reloaded (with no change), this is one way of grabbing what is needed from the source when a simpler solution is not possible. However, any updates made to the data that pertain to a different year will be missed. This will need to be taken into consideration. When using this option in conjunction with loading a dimension table, the ETL developer will need to compare to the dimension table to see if any of the dimensions have changed and only load or update the changes. Deleting records from the dimension table and reloading is not a good strategy since the fact table has foreign keys that reference the dimension table.

4.) Refresh the fact table - In this case all of the data from the source system will always be extracted and the fact table will be truncated and reloaded each time. This will only be an acceptable solution if the fact table can be loaded in a timely fashion. Also, this will only be acceptable with a fact table most of the time. Even if an ETL developer plans to refresh an entire star (fact and dimension tables) she must consider the possibility that some of these dimensions are conformed dimensions, meaning that other fact tables reference them.

There are obviously more options than these four. With whatever option you choose, it is important to build the job so that it can be run multiple times with no negative impact. In other words, running the job twice should not result in duplicate records showing up. Even though the initial extract may place more records than are needed into the staging area, the job should be "smart enough" to know exactly what to load into the data warehouse and what to exclude.

For more information on data warehousing techniques as well as data with which you can practice writing your own ETL, visit www.brianciampa.com/careerindatawarehousing/grow.html. Also, if you need a fresh approach to marketing your skillset, consider The Data Warehouse Portfolio.

Image courtesy of digitalart / FreeDigitalPhotos.net

Friday, September 28, 2012

Friday, September 21, 2012

To Constrain or Not Constrain

One of the advantages of using a relational database is that you can mandate that certain relationships MUST exist within your data. If somebody tries to enter data into the database that does not relate to other data correctly, the database can be configured to reject that "bad data" until it is corrected. Since many data warehouses reside in relational databases, using this feature is an option for the data warehousing team as well.

Consider this example...suppose that a star contains a DIM_PRODUCT table that contains the products that are used by that business process. If the Men's Raincoat product has a KEY_PRODUCT value of 27 (surrogate key) then the records in the corresponding fact tables that pertain to this product will have a KEY_PRODUCT value of 27 (foreign key). That's a lesson from Database 101. If somebody removes the Men's Raincoat product from the DIM_PRODUCT table while records that point to it exist in the fact table, then those fact table records will point to nothing...and become meaningless.

The advantage of enforcing this constraint within the database itself is that if somebody tries to remove the Men's Raincoat product from the DIM_PRODUCT table the database will not allow it until the "child records" from the fact table have been deleted or repointed. Using this feature sounds like a no-brainer (and it may be) but a data warehouse provides an additional twist to this kind of decision since so much data is being inserted.

Option 1: Enforce Constraints - This will ensure that the relationship between the fact and dimension tables are always valid from a technical perspective. However, each time that the data is loaded via the ETL job, the fact table must look to make sure that a parent record exists in the dimension. If (and only if) it exists, it will load that record into the fact. Doing that for each and every record will ensure good data intregity but it can also slow a job down.

Option 2: Do Not Enforce Constraints - This will probably result in a faster and more efficient ETL job. However, the possiblity of the fact table containing some orphan records exists.

It is up to each data warehousing team to decide which is best for their particular situation. In some cases it is appropriate to physically enforce constraints (option 1). In other cases, it may be appropriate to logically enforce constraints (option 2), meaning that the data warehousing team will need to periodically run SQL statements that specifically look for orphaned records.

If you are looking for more information on data warehousing and/or data that can be used to practice ETL and architecture skills, visit www.brianciampa.com and click on Career in Data Warehousing. Also, if you are looking for a fresh way to market your data warehousing skills, consider The Data Warehouse Portfolio.

Image: FreeDigitalPhotos.net

Consider this example...suppose that a star contains a DIM_PRODUCT table that contains the products that are used by that business process. If the Men's Raincoat product has a KEY_PRODUCT value of 27 (surrogate key) then the records in the corresponding fact tables that pertain to this product will have a KEY_PRODUCT value of 27 (foreign key). That's a lesson from Database 101. If somebody removes the Men's Raincoat product from the DIM_PRODUCT table while records that point to it exist in the fact table, then those fact table records will point to nothing...and become meaningless.

The advantage of enforcing this constraint within the database itself is that if somebody tries to remove the Men's Raincoat product from the DIM_PRODUCT table the database will not allow it until the "child records" from the fact table have been deleted or repointed. Using this feature sounds like a no-brainer (and it may be) but a data warehouse provides an additional twist to this kind of decision since so much data is being inserted.

Option 1: Enforce Constraints - This will ensure that the relationship between the fact and dimension tables are always valid from a technical perspective. However, each time that the data is loaded via the ETL job, the fact table must look to make sure that a parent record exists in the dimension. If (and only if) it exists, it will load that record into the fact. Doing that for each and every record will ensure good data intregity but it can also slow a job down.

Option 2: Do Not Enforce Constraints - This will probably result in a faster and more efficient ETL job. However, the possiblity of the fact table containing some orphan records exists.

It is up to each data warehousing team to decide which is best for their particular situation. In some cases it is appropriate to physically enforce constraints (option 1). In other cases, it may be appropriate to logically enforce constraints (option 2), meaning that the data warehousing team will need to periodically run SQL statements that specifically look for orphaned records.

If you are looking for more information on data warehousing and/or data that can be used to practice ETL and architecture skills, visit www.brianciampa.com and click on Career in Data Warehousing. Also, if you are looking for a fresh way to market your data warehousing skills, consider The Data Warehouse Portfolio.

Image: FreeDigitalPhotos.net

Friday, September 14, 2012

Bridge Tables

Each of the examples that we've used thus far in this blog have involved situations in which each fact row was associated with only on dimension row, per dimension table. In other words there has always been a many-to-one relationship between the fact table and its associated dimensions. In the real world, this relationship will not always be the case.

Suppose that a fact table contains employee salary data and each employee can be associated with multiple departments at once. Conceptually, the design would be something like this...

One possible way of resolving this is to use what some people call a bridge table. This is essentially a crosswalk between the fact and dimension designed to resolve the many-to-many problem. The design for such a table is below.

The EMPLOYEE_DEPT_BRIDGE table will not contain any data that will be seen in query results. It will only be used as a link between the FACT_SALARY and the DIM_DEPARTMENT tables. However, there is a rule that must be well understood by any and all analysts that select from this table: You must always group by the department in your query results if your select statement joins to the DIM_DEPARTMENT table (via the bridge, of course) in any way. For example, suppose that John Smith (KEY_EMPLOYEE value is 123) has $1,000 in wages and is associated with both accounting and human resources (part time in each, perhaps). Consider this SQL statement...

select a.key_employee_name,

sum(a.wage_amount)

from fact_salary a,

employee_dept_bridge b,

dim_department c

where a.key_employee = b.key_employee

and b.key_department = c.key_department

and a.key_employee = 123

group by a.key_employee_name

Because the EMPLOYEE_DEPT_BRIDGE table will contain two rows for John Smith (one for accounting and one for human resources), these results will show a salary of $2,000 which will appear incorrect to the user.

Now, consider this SQL statement...

select a.key_employee_name,

department_name,

sum(a.wage_amount)

from fact_salary a,

employee_dept_bridge b,

dim_department c

where a.key_employee = b.key_employee

and b.key_department = c.key_department

and a.key_employee = 123

group by a.key_employee_name,

department_name

The results will show his $1,000 salary associated with each department. This will appear to be more correct to the user, assuming they understand that he is associated with both departments.

In this case, educating your analysts and report writers to use this table correctly is a key component to the success of this design.

Suppose that a fact table contains employee salary data and each employee can be associated with multiple departments at once. Conceptually, the design would be something like this...

One possible way of resolving this is to use what some people call a bridge table. This is essentially a crosswalk between the fact and dimension designed to resolve the many-to-many problem. The design for such a table is below.

The EMPLOYEE_DEPT_BRIDGE table will not contain any data that will be seen in query results. It will only be used as a link between the FACT_SALARY and the DIM_DEPARTMENT tables. However, there is a rule that must be well understood by any and all analysts that select from this table: You must always group by the department in your query results if your select statement joins to the DIM_DEPARTMENT table (via the bridge, of course) in any way. For example, suppose that John Smith (KEY_EMPLOYEE value is 123) has $1,000 in wages and is associated with both accounting and human resources (part time in each, perhaps). Consider this SQL statement...

select a.key_employee_name,

sum(a.wage_amount)

from fact_salary a,

employee_dept_bridge b,

dim_department c

where a.key_employee = b.key_employee

and b.key_department = c.key_department

and a.key_employee = 123

group by a.key_employee_name

Because the EMPLOYEE_DEPT_BRIDGE table will contain two rows for John Smith (one for accounting and one for human resources), these results will show a salary of $2,000 which will appear incorrect to the user.

| KEY_EMPLOYEE | SUM(A.WAGE_AMT) |

| 123 | 2,000 |

Now, consider this SQL statement...

select a.key_employee_name,

department_name,

sum(a.wage_amount)

from fact_salary a,

employee_dept_bridge b,

dim_department c

where a.key_employee = b.key_employee

and b.key_department = c.key_department

and a.key_employee = 123

group by a.key_employee_name,

department_name

The results will show his $1,000 salary associated with each department. This will appear to be more correct to the user, assuming they understand that he is associated with both departments.

| KEY_EMPLOYEE | DEPARTMENT_NAME | SUM(A.WAGE_AMT) |

| 123 | ACCOUNTING | 1,000 |

| 123 | HUMAN RESOURCES | 1,000 |

In this case, educating your analysts and report writers to use this table correctly is a key component to the success of this design.

Friday, September 7, 2012

ETL - Practice Loading A Fact - Solution

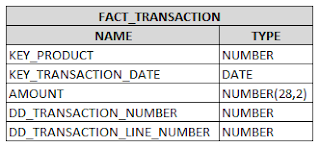

In a prior post we looked at the process by which a fact table is loaded. I need to apologize, since the ERD of the FACT_TRANSACTION table that I posted in that prior post was incorrect. It has since been updated. The four things that an ETL job needs to accomplish are...

1.) Select all necessary data from the source

a. Measures

b. Natural Keys of the dimension objects

c. Degenerate Dimension objects

2.) Transform the data as needed

a. Aggregate measures and group by the appropriate objects

b. Add foreign keys to dimension tables

3.) Delete any existing rows from the fact table that will be replaced by the new data

4.) Load the fact table

Consider this PL/SQL procedure as a procedure that will accomplish these things.

Extract

The STAGE_TRANSACTION_DATA table pulls all of the necessary elements from the source system into the staging area. I am treating one schema as both the staging area and the presentation area. This is probably unrealistic in a production environment but it keeps things simple for these purposes.

Transform

1.) The STAGE_TRANS_DATA_PROD_KEY table is used to grab the KEY_PRODUCT from the DIM_PRODUCT dimension. Notice that this is done by joining the product's natural key, which is the PRODUCT_NO (product number), to the DIM_PRODUCT table. An outerjoin is used so that if a record comes into the staging area for a product that does not exist in the DIM_PRODUCT table, that record will not be excluded from the fact table. It will exist with a value of -1 and will need to be addressed after the ETL has run.

2.) The STAGE_TRANS_DATA_DATE_KEY table is used to transform the TRANSACTION_DATE into a key that will join to a date dimension. I created the date dimension on my own, so I'll let you try and tackle that one. Those dimensions typically have no source and are created as needed by the ETL developer. Again, an outer join is used so that if a date does not exist in that dimension a value of -1 is placed into the fact table.

Load

1.) This fact table, because it is so small, is truncated and then repopulated each time that the ETL runs. Date logic can be written so that only a certain time period will be refreshed with each run, but for simplicity's sake I did it this way.

2.) Finally, the data is loaded into the FACT_TRANSACTION table.

Prior posts have talked about turning data into valuable data. This post shows you how to accomplish that in a hands-on way.

Remember to take a look at www.brianciampa.com (specifically, click on Career In Data Warehousing and then Grow) for larger datasets that can be used for this kind of practice.

Image: FreeDigitalPhotos.net

1.) Select all necessary data from the source

a. Measures

b. Natural Keys of the dimension objects

c. Degenerate Dimension objects

2.) Transform the data as needed

a. Aggregate measures and group by the appropriate objects

b. Add foreign keys to dimension tables

3.) Delete any existing rows from the fact table that will be replaced by the new data

4.) Load the fact table

Consider this PL/SQL procedure as a procedure that will accomplish these things.

Extract

The STAGE_TRANSACTION_DATA table pulls all of the necessary elements from the source system into the staging area. I am treating one schema as both the staging area and the presentation area. This is probably unrealistic in a production environment but it keeps things simple for these purposes.

Transform

1.) The STAGE_TRANS_DATA_PROD_KEY table is used to grab the KEY_PRODUCT from the DIM_PRODUCT dimension. Notice that this is done by joining the product's natural key, which is the PRODUCT_NO (product number), to the DIM_PRODUCT table. An outerjoin is used so that if a record comes into the staging area for a product that does not exist in the DIM_PRODUCT table, that record will not be excluded from the fact table. It will exist with a value of -1 and will need to be addressed after the ETL has run.

2.) The STAGE_TRANS_DATA_DATE_KEY table is used to transform the TRANSACTION_DATE into a key that will join to a date dimension. I created the date dimension on my own, so I'll let you try and tackle that one. Those dimensions typically have no source and are created as needed by the ETL developer. Again, an outer join is used so that if a date does not exist in that dimension a value of -1 is placed into the fact table.

Load

1.) This fact table, because it is so small, is truncated and then repopulated each time that the ETL runs. Date logic can be written so that only a certain time period will be refreshed with each run, but for simplicity's sake I did it this way.

2.) Finally, the data is loaded into the FACT_TRANSACTION table.

Prior posts have talked about turning data into valuable data. This post shows you how to accomplish that in a hands-on way.

Remember to take a look at www.brianciampa.com (specifically, click on Career In Data Warehousing and then Grow) for larger datasets that can be used for this kind of practice.

Image: FreeDigitalPhotos.net

Wednesday, September 5, 2012

Tales of Work

I typically do not blog in the middle of the week, but this week I'll make an exception. Kimanzi Constable is a career coach who maintains a great blog, called Tales of Work, on discovering your talents and finding work that you enjoy. He has been gracious enough to run a guest post that I wrote on the portfolio concept. You can check out the guest post here.

My blog is built on the premise that data warehousing and business intelligence are about helping decision makers to see the true value in their data. Those decision makers are best helped by those who are passionate about data. That best describes me, which is why I maintain the Valuable Data blog in hopes of helping other passionate people to hone that skill. If you find yourself needing some help in finding your passion, consider the Tales of Work blog.

Image: Courtesy of FreeDigitalPhotos.net

My blog is built on the premise that data warehousing and business intelligence are about helping decision makers to see the true value in their data. Those decision makers are best helped by those who are passionate about data. That best describes me, which is why I maintain the Valuable Data blog in hopes of helping other passionate people to hone that skill. If you find yourself needing some help in finding your passion, consider the Tales of Work blog.

Image: Courtesy of FreeDigitalPhotos.net

Sunday, September 2, 2012

ETL - Practice Loading A Fact

We looked recently at the process used to load a dimension table. Consider this post as a way to practice loading a fact table. An ETL job that is designed to load a fact table will accomplish these things…

1.) Select all necessary data from the source

a. Measures

b. Natural Keys of the dimension objects

c. Degenerate Dimension objects

2.) Transform the data as needed

a. Aggregate measures and group by the appropriate objects

b. Add foreign keys to dimension tables

3.) Delete any existing rows from the fact table that will be replaced by the new data

4.) Load the fact table

As with the dimension example, no two developers will do this the same way, which is perfectly fine. Consider this example which is to be used in conjunction with the two tables used in the ETL – Practice Loading a Dimension post (click on the image to enlarge it)…

…and this fact table needs to be populated.

Run the following scripts in Oracle to create the source data

1.) Product data – This is the same script from the ETL – Practice Loading a Dimension post. If you have already run this one, you are fine.

2.) Transaction data

The TRANSACTION_DETAIL.PRODUCT_ID is a foreign key to the PRODUCT.ID field from the ETL – Practice Loading a Dimension post. If you wish, try to write an ETL job that will populate the FACT_TRANSACTION table in PL/SQL or another language of your choosing. We will examine a possible solution in a future post.

For more practice data and additional information on dimensional modeling visit www.brianciampa.com. Also, if you need a fresh approach to marketing your data warehousing skillset consider The Data Warehouse Portfolio.

For more practice data and additional information on dimensional modeling visit www.brianciampa.com. Also, if you need a fresh approach to marketing your data warehousing skillset consider The Data Warehouse Portfolio.

Subscribe to:

Posts (Atom)